
In Today’s Issue:

⚔️ Anthropic’s new mid-tier model hits "Opus-level" performance in coding and agentic planning

🏛️ Andrew Yang warns that millions of mid-career roles will be "vaporized"

👤 A newly granted patent reveals Meta’s designs for an AI that "simulates" users after death

🎓 Khan Academy CEO calls for a 1% corporate profit tax to fund a massive "reskilling infrastructure"

✨ And more AI goodness…

Dear Readers,

The AI job market just got its starkest wake-up call yet: Salman Khan warns that even a 10% drop in white-collar roles could feel like an economic depression, while Andrew Yang puts the timeline at just 12 to 18 months before millions of professional jobs vanish. Today's issue dives deep into both warnings and their implications for you. But disruption isn't the only story.

Meta just patented technology to keep your social media presence alive after you die, raising questions about digital immortality that sound like science fiction but are already in the patent office. On the brighter side, Anthropic's new Claude Sonnet 4.6 is collapsing the gap between frontier and mid-tier AI at a fraction of the cost, and Google researchers discovered that simply copy-pasting your prompt twice can boost the accuracy of non-reasoning models, in one case from 21% to 97%, with no extra output tokens and no setup. Add in a striking graph showing global uncertainty hitting an all-time high, a quote from an AI developer calling out Europe's innovation-killing regulatory culture, and Kim's (my :D) latest conversation with two professors on AI's societal impact - and you've got an issue packed with signals you can't afford to miss.

Let's dive in.

All the best,

🤖 AI Threatens White-Collar Jobs

Salman Khan, CEO of Khan Academy, warns that the AI revolution could hit the U.S. job market faster than expected: even a 10% drop in white-collar roles could “feel like a depression.” With milestones like IBM’s Deep Blue beating Garry Kasparov, ChatGPT passing the bar, and Google DeepMind winning math gold, AI’s rapid progress is fueling fears of mass unemployment, with AI potentially replacing up to 12% of the workforce, according to MIT.

Khan proposes a bold fix: companies commit 1% of their profits to fund large-scale reskilling before automation - from driverless cars to AI-powered customer service - reshapes over 1 million gig and professional jobs. With 55,000 AI-linked layoffs already reported and unemployment at 4.3%, leaders are urged to act now before disruption accelerates.

🧠 Meta Patents Digital Afterlife AI

Meta has secured a patent for an AI system that could simulate a user’s social media presence - even after death - by replicating posts, chats, voice messages, and interactions using past data. The filing suggests advanced capabilities like AI-driven audio and video calls, effectively creating a digital persona powered by large language models. While Meta says there are “no plans” to launch it, the move underscores how fast AI identity tech is evolving - and raises big ethical questions about consent, legacy, and digital immortality.

⚠️ AI Layoffs Loom Large

Andrew Yang warns that AI could eliminate millions of white-collar jobs within 12–18 months, hitting mid-career professionals, managers, marketers, coders, and call center workers first. He argues companies will aggressively cut headcount to please markets, creating a domino effect that could ripple into local economies - from dry cleaners to dog walkers - as consumer spending drops. With January layoffs already at their highest since 2009 and firms like Pinterest and HP citing AI-driven restructuring, Yang says the shift isn’t theoretical - it’s accelerating fast.

OpenClaw developer Peter Steinberger:

“In the USA, most people are enthusiastic. In Europe, I get insulted, people scream REGULATION and RESPONSIBILITY.

And if I really build a company here, then I get to struggle with things like investment protection laws, employee co-determination, and paralyzing labor regulations. At OAI, most people work 6-7 days a week and get paid accordingly. With us, that’s illegal.”

Kim was once again talking with two professors about AI and its current impact on our society. The conversation is in German, but you can turn on English subtitles if you like.

Sonnet Gets Smarter!

The Takeaway

👉 Claude Sonnet 4.6 matches Opus-class performance on key benchmarks - at Sonnet pricing ($3 input / $15 output per million tokens), making high-capability AI dramatically more accessible for developers and enterprises alike

👉 Computer use is no longer experimental: early users report human-level performance on real tasks like navigating spreadsheets and filling multi-step web forms, with major prompt injection resistance improvements

👉 The 1M token context window enables whole-codebase reasoning in one shot - critical for long-horizon planning, contract analysis, and complex agentic workflows

👉 Developers preferred Sonnet 4.6 over the previous Opus 4.5 model 59% of the time in Claude Code - signaling that the frontier/mid-tier distinction is rapidly eroding

Frontier AI just got cheaper. Anthropic's Claude Sonnet 4.6 is here, and it's rewriting the rules of what a mid-tier model can do, delivering performance that previously required their most powerful Opus-class models, at a fraction of the cost.

So what makes Sonnet 4.6 special? It's a full upgrade across coding, computer use, long-context reasoning, and document analysis. Developers testing it in Claude Code preferred it over the previous Sonnet 70% of the time, and even chose it over the older Opus 4.5 model 59% of the time. That's not an incremental improvement. That's a generational leap.

For the AI community, this matters enormously. The gap between "smart enough" and "frontier-level" is collapsing fast. Sonnet 4.6 now ships with a 1 million token context window - enough to load entire codebases into a single prompt - and handles complex agentic tasks that once demanded expensive compute.

Why it matters: Sonnet 4.6 makes frontier-level AI accessible at mainstream pricing, fundamentally changing the cost-performance calculus for developers and enterprises. It signals that the era of "pay more for intelligence" may be ending - capable AI is becoming the default, not the premium.
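To make the cost side concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the $3/$15 figure means $3 per million input tokens and $15 per million output tokens, and that the same flat rate applies to every request; real pricing may differ (for example, for very long prompts), so treat the numbers as illustrative. The function name `estimate_cost_usd` is ours, not part of any Anthropic SDK.

```python
# Hypothetical helper: estimates the cost of one request at flat per-token rates.
# Assumed rates: $3 per million input tokens, $15 per million output tokens.
INPUT_USD_PER_MILLION = 3.0
OUTPUT_USD_PER_MILLION = 15.0


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for a single request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens * INPUT_USD_PER_MILLION / 1_000_000
    output_cost = output_tokens * OUTPUT_USD_PER_MILLION / 1_000_000
    return input_cost + output_cost


if __name__ == "__main__":
    # Example: a 200,000-token codebase prompt with a 4,000-token answer.
    print(f"${estimate_cost_usd(200_000, 4_000):.2f}")
```

Under these assumptions, input tokens dominate the bill for long-context work, so the size of the prompt matters more than the length of the answer.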

Sources:

🔗 https://claude.com/blog/improved-web-search-with-dynamic-filtering

Stop Updating Your CRM. Let It Update Itself.

If you're a founder doing 10+ customer calls a week, you already know the tax: manual notes, stale fields, follow-ups that fall through the cracks, and a Sunday night spent trying to remember who asked for what.

Lightfield is an AI-native CRM built to eliminate all of it.

👉 Connects to Gmail and calendar — automatically creates accounts, contacts, and opportunities from your actual conversations

👉 Every email and meeting is captured, searchable, and stitched into a single customer history

👉 Ask it anything: "What features keep coming up?" "Which deals went cold after my last email?" "Who should I follow up with this week?"

👉 Schema-less architecture means you start on day zero, no setup, no configuration — and backfill up to 2 years of history automatically

Founders switching from HubSpot and Attio tell us the same thing: they were spending more time maintaining their CRM than selling. Lightfield flips that.

The world has reached its highest level of uncertainty in history, surpassing Covid, the Global Financial Crisis, and the Dot Com Bubble.

Repeat Your Prompt, Get Better AI

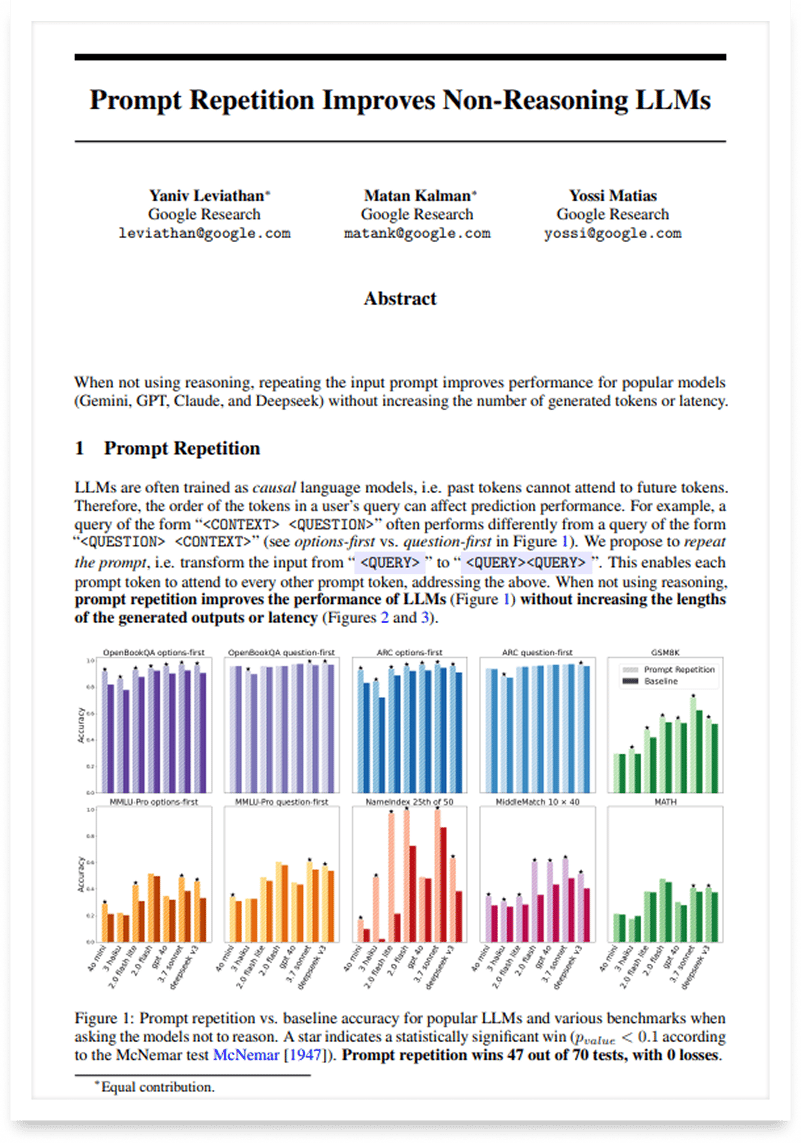

Copy-paste your prompt twice - and watch your AI get smarter. That's the surprisingly simple finding from a new Google Research paper by Yaniv Leviathan, Matan Kalman, and Yossi Matias. The trio - already famous for inventing speculative decoding - discovered that simply repeating the entire input prompt before sending it to an LLM dramatically improves accuracy across the board.

The reason is structural: LLMs read left to right, so early tokens in your prompt never "see" what comes later. By repeating the prompt, every token gets a second pass where it can attend to the full context. The team tested this across seven models, spanning Gemini, GPT-4o, Claude, and DeepSeek, on seven benchmarks. Prompt repetition won 47 out of 70 tests, with zero losses. One model jumped from 21% to 97% accuracy on a name-lookup task.

The kicker? No extra output tokens, no added latency, no finetuning required. The repetition happens during the parallelizable prefill stage, making it essentially free. When reasoning is already enabled, the effect becomes neutral, because reasoning models have already learned to repeat parts of the prompt internally.

This is possibly the lowest-effort performance boost available for non-reasoning LLM use cases today. It requires zero infrastructure changes and works as a universal drop-in improvement across all major model providers.
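If you want to try it, the trick is a one-liner. The sketch below is a minimal Python illustration, not the paper's code: the helper name `repeat_prompt` and the blank-line separator between copies are our assumptions, and you would pass the result to whatever LLM client you already use.

```python
# Hypothetical helper illustrating prompt repetition.
# The separator between copies is an assumption; adjust it to taste.
def repeat_prompt(prompt: str, times: int = 2, separator: str = "\n\n") -> str:
    """Return the prompt repeated `times` times, joined by `separator`."""
    if times < 1:
        raise ValueError("times must be at least 1")
    return separator.join([prompt] * times)


if __name__ == "__main__":
    question = "Here is a list of names: Ana, Ben, Cara. Who comes second?"
    # Send the repeated text instead of the original as the user message.
    print(repeat_prompt(question))
```

Keep in mind that the repeated prompt doubles your input token count, so it is free in output tokens but not necessarily in input billing.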

The best marketing ideas come from marketers who live it. That’s what The Marketing Millennials delivers: real insights, fresh takes, and no fluff. Written by Daniel Murray, a marketer who knows what works, this newsletter cuts through the noise so you can stop guessing and start winning. Subscribe and level up your marketing game.